The event began with a welcome address by Jörg Mühlhans, head of the MediaLab, setting the stage for an interdisciplinary dialogue.

Felix Klooss from the University of Vienna, a predoctoral researcher at the MediaLab, opened the presentations with a talk titled “Augmented Reality in Musicology,” on using augmented reality (AR) to study the interactions between musicians and their environments. Because AR can separate visual and auditory elements, it offers a clearer view of how each influences a performance. Klooss highlighted the potential of AR for advancing music research.

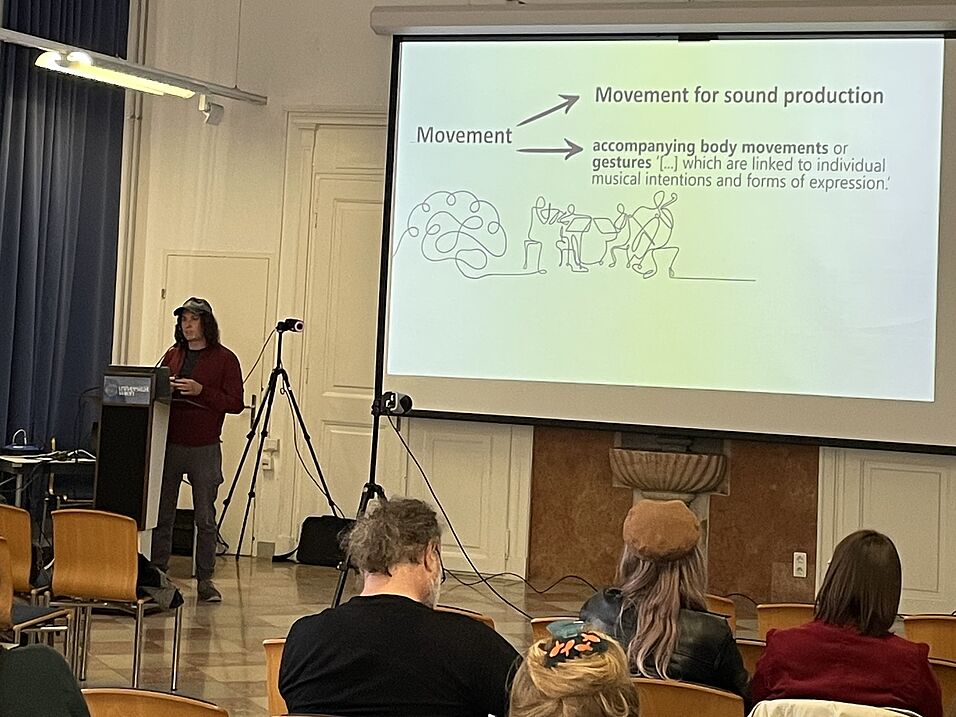

Next, Laura Bishop from the University of Oslo presented “Coordination of Bodily Rhythms and Experiences of Musical Togetherness in Ensemble Playing,” exploring how musicians use body movements to create a sense of unity and “musical togetherness.” Her research, grounded in cognitive and experimental psychology, examined the role of body rhythms in ensemble playing, particularly how musicians use physical cues and emotional synchronization to coordinate performances.

Christoph Reuter from the University of Vienna followed with “Data Science with Tools from SInES.” He introduced online tools available at the Space for Interdisciplinary Experiments on Sound (SInES), which can capture and process body and facial movements, emotions, and audio features from video and audio files. These tools support a wide range of data analysis in platforms like Excel and MATLAB, providing new opportunities for interdisciplinary research in music and motion studies.

The event concluded with a live demonstration of the motion-capture system, in which participants saw how body movements are translated into data and which challenges, such as “ghosts and gaps,” arise in data preparation. The evening ended with a buffet and networking session, giving participants the chance to connect and discuss the talks.